System and integration tests of embedded devices: When does automation make sense?

Introduction

Verifying the functionality of a complete product is a vital part of the development process.

For products combining hardware and software, this verification is often done manually. Automated system and integration tests with hardware-in-the-loop (HIL) can significantly reduce the workload and are a key component of the Testing Trophy (see link to Testing Trophy).

This article explores the benefits of automated testing and highlights key considerations for maximizing its effectiveness. However, it does not delve into specific technologies in detail.

When should automated tests be used in development?

Verification

No one enjoys running numerous manual tests for every new software version. The idea of automating this process is appealing, but expecting immediate time savings can be misleading.

In many projects, developing and maintaining a robust test environment takes more effort than running the tests manually.

To justify this investment, it is crucial to use the test environment beyond verification, especially in the following scenarios:

Regression Tests

Early detection of regressions (when a new feature disrupts an existing one) is invaluable.

Fixing bugs is easier when developers know which changes caused them. Early detection also minimizes surprises during final verification and reduces the need for extra verification rounds.

A subset of existing tests should be executed with every pull/merge request. Here are some tips:

- Ensure tests run in a few minutes to avoid slowing down development.

- Schedule overnight or weekend test runs to ensure regular execution.

- Categorize tests early to streamline execution at different stages.
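The categorization tip above can be sketched in a few lines of Python. This is only an illustration: the `category` decorator, the `STAGES` table, and the example test names are hypothetical and not taken from any particular project. Test frameworks such as pytest offer comparable mechanisms (markers), which would normally be preferred over a hand-written registry.

```python
from collections import defaultdict

# Hypothetical registry: maps a category name to the tests tagged with it.
_REGISTRY = defaultdict(list)


def category(*names):
    """Tag a test function with one or more categories."""
    def decorator(func):
        for name in names:
            _REGISTRY[name].append(func)
        return func
    return decorator


# Assumed stages and the categories each stage executes.
STAGES = {
    "pull_request": ["smoke"],
    "nightly": ["smoke", "regression"],
    "weekend": ["smoke", "regression", "endurance"],
}


def select(stage):
    """Return the tests for a stage, grouped by category, without duplicates."""
    selected = []
    for name in STAGES[stage]:
        for test in _REGISTRY[name]:
            if test not in selected:
                selected.append(test)
    return selected


@category("smoke")
def test_connect():
    pass  # placeholder for a fast connection test


@category("regression")
def test_reconnect_after_timeout():
    pass  # placeholder for a slower regression test


@category("endurance")
def test_24h_data_exchange():
    pass  # placeholder for a long-running test


if __name__ == "__main__":
    for stage in STAGES:
        print(stage, [t.__name__ for t in select(stage)])
```

Tagging each test when it is written keeps the pull-request run short, while the overnight and weekend runs pick up the slower categories automatically.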

Supporting Development

Ideally, the test framework is used during development to test new features in parallel with their implementation.

Software is often divided into modules, with low-level modules developed first and later integrated into high-level modules by another developer.

How can low-level modules be tested before integration?

Unit tests often fall short as they don't involve hardware. Without automated integration tests, developers resort to temporary test code, which is removed after implementation.

Manual testing of the complete functionality (integration of low- and high-level modules) can be reduced with automated integration/system tests.

A well-designed test framework encourages developers to write tests during implementation, replacing temporary test code with permanent integration tests. Instead of repetitive manual testing, corresponding system/integration tests are created.

A third party can develop black box tests based on specifications. If specifications are insufficient, the developer can write white box tests during feature implementation to ensure high test coverage.

Requirements for a Test Framework

Stability

Stability in automated tests is critical and complex. Errors can arise from firmware upload issues, USB connection problems, host updates, timing changes, and other causes.

Unstable tests lead to repeated failures during verification. They are often re-run with the justification that “the test usually works” until the desired result is achieved.

With regression tests, developers may lose confidence in the results, dismissing errors as connection issues. This can lead to overlooked new errors and an increase in faulty tests.

Test Runtime

Short test runtimes allow more tests to be executed per pull request or overnight.

Quick test runtimes positively impact the motivation to use the test framework during development. If manual tests are faster, developers may avoid implementing and running automated tests.

Usability

A user-friendly test framework encourages developers to implement tests quickly and easily.

If automating tests is cumbersome, developers might revert to manual testing.

Test Coverage

High automated test coverage is desirable as untested software components require manual verification.

Solution Approaches

Test Setup

A detailed setup ensures the DUT (Device Under Test) is always in the same state before each test.

Tasks may include:

- Firmware verification

- DUT restart

- Database reset

- Battery status check

- Error log reset

- Motor reset, etc.

While a detailed setup increases test stability, it negatively impacts runtime.

A quicker, less detailed setup can be a compromise, suitable for development where short test runtimes are prioritized over maximum stability.

The setup must work under all circumstances, even if faulty software puts the DUT in undesirable states. The test host must handle tasks like downloading new firmware or restoring the database if needed.
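The trade-off between a detailed and a quick setup, together with recovery from an undesirable state, could look roughly like the sketch below. `FakeDut` is a stand-in for a real device, and all of its attributes and methods are assumptions made for this example.

```python
class FakeDut:
    """Stand-in for a real device; every attribute here is an assumption."""

    def __init__(self, firmware="1.0.0"):
        self.firmware = firmware
        self.responsive = True
        self.database = {"stale": True}
        self.error_log = ["old error"]

    def restart(self):
        self.responsive = True

    def flash(self, version):
        # On real hardware this would download the firmware to the device.
        self.firmware = version
        self.responsive = True


def prepare_dut(dut, expected_firmware, full=True):
    """Bring the DUT into a known state and return the steps performed.

    full=True  -> detailed setup (more stable, slower)
    full=False -> quick setup for use during development
    """
    steps = []
    # Recovery comes first: it must work even if faulty software
    # has left the DUT unresponsive or with the wrong firmware.
    if not dut.responsive or dut.firmware != expected_firmware:
        dut.flash(expected_firmware)
        steps.append("flash")
    if full:
        dut.restart()
        steps.append("restart")
        dut.database = {}
        steps.append("database reset")
    dut.error_log.clear()
    steps.append("error log reset")
    return steps


if __name__ == "__main__":
    broken = FakeDut("0.9.0")
    broken.responsive = False
    print(prepare_dut(broken, "1.0.0"))
    print(prepare_dut(FakeDut("1.0.0"), "1.0.0", full=False))
```

Returning the list of executed steps makes it easy to log what the setup actually did before each test, which helps when analysing unstable runs.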

Automated Hardware Control

With sufficient sensors, actuators, and mechanics, almost anything can be automated. However, this requires significant effort and may affect test stability.

For example, using a camera to check a display introduces another potential fault source (connection issues, camera crashes, etc.).

Careful consideration is needed to determine the value and feasibility of hardware automation.

- Can critical points be tested without hardware automation?

- Can time-consuming manual tests be replaced with automated ones?

Communication Interface

The communication interface between the test host and DUT must be highly reliable, as interface issues are a major cause of test instability.

Wired connections improve stability, even for typically wireless setups (e.g., using cables instead of antennas for Bluetooth).

The interface architecture and protocol significantly influence the overall usability of the test framework. A dedicated test interface makes it easier to develop tests during implementation.

Such a test interface should be protected from unauthorized access. Removing it entirely from the software release is not advisable, as this would require a special test build, and the tests would then no longer run against the actual release.
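One possible way to protect a test interface that stays in the release is a challenge-response handshake with a shared key. The sketch below simulates both the device side and the host side in Python for illustration only; the class `DeviceTestInterface`, the command format, and the way the key is provisioned are assumptions, and on a real device this logic would live in the firmware.

```python
import hashlib
import hmac
import secrets


class DeviceTestInterface:
    """Simulated device side: stays locked until the host proves
    that it knows the shared key (hypothetical design)."""

    def __init__(self, key: bytes):
        self._key = key
        self._challenge = None
        self.unlocked = False

    def get_challenge(self) -> bytes:
        # A fresh random challenge prevents replaying an old response.
        self._challenge = secrets.token_bytes(16)
        return self._challenge

    def unlock(self, response: bytes) -> bool:
        if self._challenge is None:
            return False
        expected = hmac.new(self._key, self._challenge, hashlib.sha256).digest()
        self._challenge = None  # each challenge is valid only once
        self.unlocked = hmac.compare_digest(expected, response)
        return self.unlocked

    def handle(self, command: str) -> str:
        if not self.unlocked:
            return "ERR locked"
        return f"OK {command}"


def host_response(key: bytes, challenge: bytes) -> bytes:
    """Host side: compute the response to the device's challenge."""
    return hmac.new(key, challenge, hashlib.sha256).digest()


if __name__ == "__main__":
    key = b"example-key-for-illustration-only"
    device = DeviceTestInterface(key)
    print(device.handle("read_error_log"))  # rejected while locked
    device.unlock(host_response(key, device.get_challenge()))
    print(device.handle("read_error_log"))  # accepted after unlocking
```

With such a scheme the same firmware image can be tested and shipped, while the test commands remain unavailable to anyone without the key.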

Some manual tests should supplement the automated ones since the DUT isn't tested exactly as a user would experience it.

Monitoring

Information exchange between the DUT and the test host can substitute for complex automated hardware control.

For instance, for a DUT with a display, the display software module can forward an event to the test host for each of the various display contents it shows.

This allows verification of correct display triggers, but manual tests are still needed to ensure display accuracy.

This approach enhances stability and reduces effort, though all possible displays must be manually checked.
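The monitoring idea can be illustrated with a small host-side collector. In this sketch, `EventMonitor`, `FakeDisplayModule`, and the screen names are hypothetical; on a real system the events would arrive over the communication interface rather than through a direct function call.

```python
from dataclasses import dataclass, field


@dataclass
class EventMonitor:
    """Host-side collector for events forwarded by the DUT (hypothetical)."""
    events: list = field(default_factory=list)

    def on_event(self, source: str, name: str) -> None:
        self.events.append((source, name))

    def names(self, source: str) -> list:
        """Return the event names of one source in the order received."""
        return [name for src, name in self.events if src == source]


class FakeDisplayModule:
    """Stand-in for the display software module of the DUT."""

    def __init__(self, forward):
        self._forward = forward

    def show(self, screen: str) -> None:
        # The real module would also draw the screen; here only the
        # event is forwarded to the test host.
        self._forward("display", screen)


def low_battery_sequence(display) -> None:
    """Assumed behaviour under test: warn first, then announce shutdown."""
    display.show("battery_low_warning")
    display.show("shutdown_notice")


if __name__ == "__main__":
    monitor = EventMonitor()
    low_battery_sequence(FakeDisplayModule(monitor.on_event))
    print(monitor.names("display"))
```

A test can then assert on the sequence of forwarded events, while the visual appearance of each screen is checked once by hand.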

Example

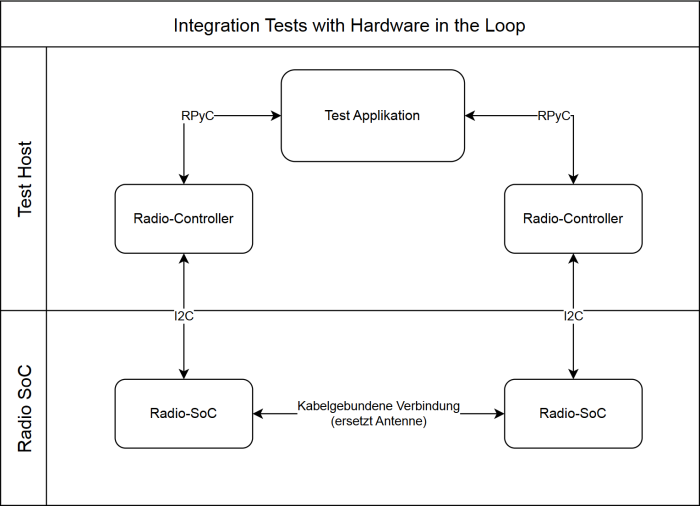

Figure 1: Block Diagram Example

The DUT consists of two hardware components: a proprietary radio SoC, which provides simple functionalities such as connection establishment and data exchange, and a microcontroller, which controls the radio SoC, user interfaces, battery management, etc.

Several of these devices can be connected wirelessly to form a network.

The integration tests in this example focus on testing the radio controller, which is responsible for controlling the radio SoC. Detailed tests are particularly important for this software component, as it is a very complex part of the firmware.

The test application is written in Python and is executed on the test host. The radio controller, which normally runs on the microcontroller, is also executed on the test host. Only the radio SoC is integrated into the test system with original hardware and firmware.

Communication between the test application and the radio controller takes place via Remote Python Call (RPyC), and uses the same methods/classes as the actual firmware.

The antenna is replaced by a wired connection.

Such a test setup has various advantages:

- Python allows fast implementation of the test application. There is no need for lengthy compiling.

- As the radio controller to be tested is executed on the host, the setup time before each test run is reduced as there is no need to download the firmware.

- Omitting the microcontroller eliminates a source of error and results in more stable tests.

- The wired connection between the radio SoCs reduces interference and ensures more stable tests.

- New functionalities for the radio controller can be implemented and tested before they are integrated into the rest of the microcontroller application.

Disadvantages that have been accepted with this test setup include:

- Certain errors cannot be found, for example due to different timings on the test host and microcontroller. Most critical timings run on the radio SoC, so this point is not critical. System tests are necessary as a supplement.

- The radio connection is only tested in the (unrealistic) interference-free case. Tests in which specific faults are injected are still necessary.

Conclusion

The effort to set up and maintain automated tests is significant. Start with a simple test setup focusing on stability, usability, and testing critical software components that can't be covered by unit tests or are time-consuming to test manually.

With a solid foundation, it becomes easier to estimate the effort required to automate testing for other software/hardware parts.

Use automated tests throughout all project phases.

Hopefully, this article has provided valuable insights into successfully implementing such projects. Good luck!

Do you have questions? We’re here to offer project-specific advice, workshops, or the implementation of a test framework.

We look forward to hearing from you.

Nicola Jaggi

BSc BFH in Electrical and Communication Engineering

Embedded Software Engineer

About the author

Nicola Jaggi has been an embedded software engineer at CSA Engineering AG for 11 years. His focus is on the development of firmware in C++ on STM32.

He has also set up, extended, and used several test automation systems in recent years.